How to Enhance Process Efficiency: A Best Practice Guide to Utilizing Automation Solutions

Keywords: process efficiency, automation, customized solutions

Thanks to breakthroughs in AI and automation technology, businesses of all sizes now automate processes to turn tedious manual tasks into streamlined operations. While tools like Zapier, Power Automate, and Hive have revolutionized routine workflows with trigger-based automation, the one-size-fits-all approach sometimes falls short in handling unique, one-off tasks that demand a more tailored solution.

The Challenge

Consider the time-intensive task of manually downloading and categorizing hundreds of resources from a website, a process that not only drains hours but also introduces the risk of human error. Existing web scraping tools do not help here, because we are not scraping data directly from the page but downloading files from it, which usually requires user interaction (e.g., clicking a button on the webpage that triggers a JavaScript event listener and starts the download).

This scenario underscores a gap in the market: the need for bespoke automation capable of adapting to specific, non-recurring tasks.

Our Solution

* We advocate for ethical web scraping and automation, ensuring all our solutions comply with legal standards and respect data privacy and website terms of service. Please check the robots.txt file of the website you'd like to scrape and comply with its requirements before running any automation.
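As a minimal sketch of what such a check could look like, the snippet below uses Python's standard `urllib.robotparser` module. The rules, the `my-bot` user agent, and the `example.com` URLs are hypothetical and only there for illustration; the helper name `is_allowed` is ours, not part of any library.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules, for illustration only.
SAMPLE_RULES = """\
User-agent: *
Disallow: /private/
"""

print(is_allowed(SAMPLE_RULES, "my-bot", "https://example.com/resources/"))
print(is_allowed(SAMPLE_RULES, "my-bot", "https://example.com/private/x"))
```

Against a live site, you would typically call `set_url()` with the site's robots.txt address and then `read()` on the parser instead of passing the text in by hand. Keep in mind that robots.txt is only one part of the picture; the website's terms of service still apply.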

To see the key takeaways, skip to the end of this article.

In this post, we will introduce you to a solution for this type of challenge. We wrote a Python script using Selenium, an open-source browser automation framework with Python bindings that is used for dynamic online interaction. Selenium is widely used for automated testing of web applications. As you will see later, it is also a powerful way to streamline any repetitive process that involves interacting with a website or web application: for instance, grabbing screenshots of the click-to-open rate performance of all the marketing emails sent to your customers, downloading and exporting data from websites, or exploring and extracting data from deep-linked webpages that require navigating between pages, to name a few.

Below is a step-by-step guide based on a case study, where we show you how to create a bot that navigates a website on its own, downloads free resources, and sorts them into designated directories. A task that previously consumed several human hours now runs in the background with no supervision.

Suppose you want to download free template resources from the 365 Data Science resource website in bulk. You visit the website and notice that there are ten pages of resources. Given that approximately 20 templates are shown on each page, you quickly realize that there are around 200 templates to download, each on its own landing page.

Technical Breakdown

Step 1: Explore and understand the structure of the web application and the user journey when navigating between pages.

In this case, we need to take the following steps to download one of the templates from the source (feel free to follow the steps to explore the navigation yourself):

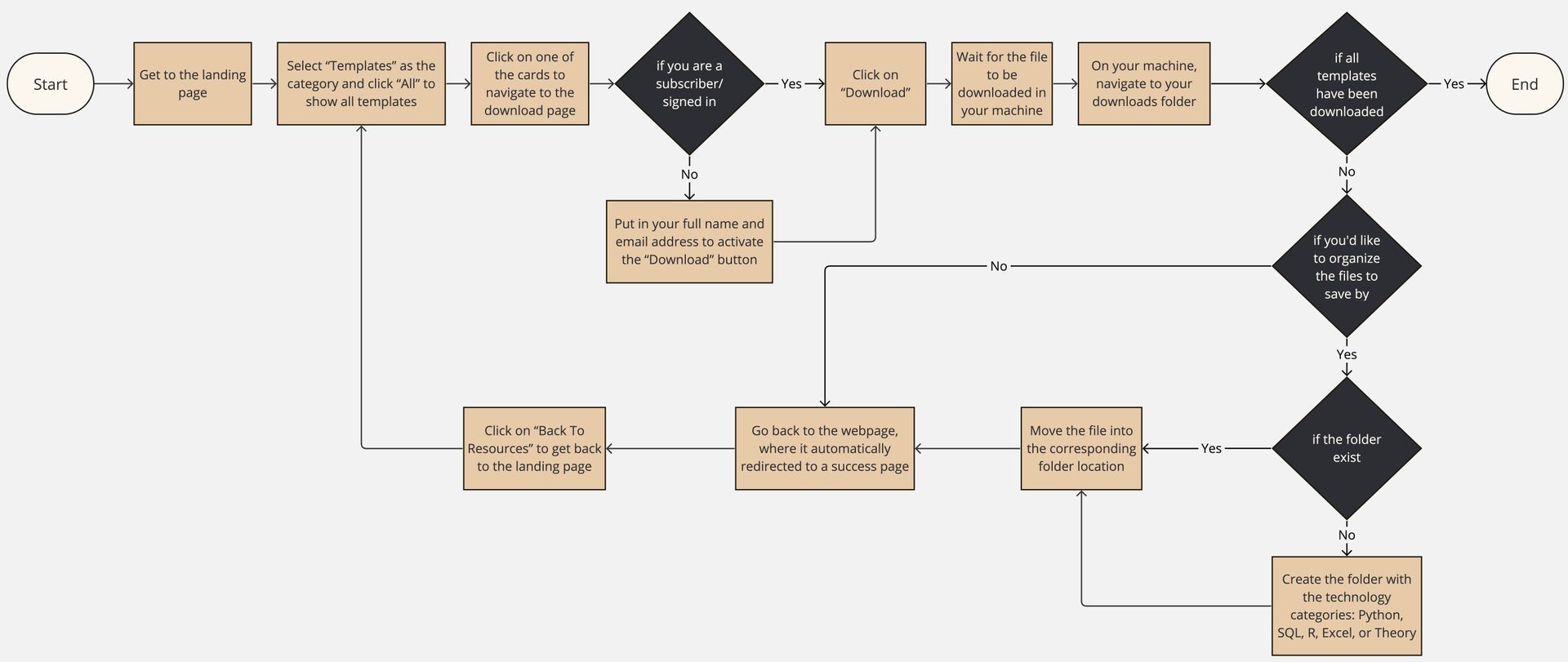

The flow chart of the steps

- Get to the landing page;

- Select “Templates” as the category and click “All” to show all templates;

- Click on one of the cards to navigate to the download page;

- Put in your full name and email address to activate the “Download” button if you are not a subscriber or not signed in;

- Click on “Download”;

- Wait for the file to be downloaded on your machine;

- On your machine, navigate to your downloads folder;

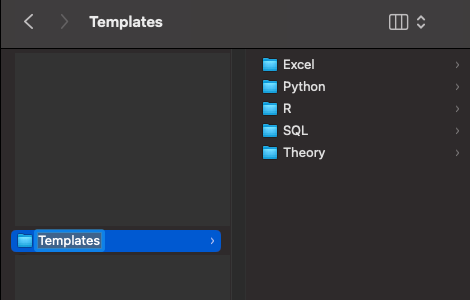

- If you want to organize the saved files by category, create folders for the technology categories: Python, SQL, R, Excel, and Theory;

- Move the file into the corresponding folder location;

- Go back to the webpage, which has automatically redirected to a success page;

- Click on “Back To Resources” to get back to the landing page;

- Repeat until all templates are downloaded and moved to the corresponding folder directories.

Step 2: Figure out the key steps and structures as a blueprint for coding the project automation.

We notice that to automate the whole process, the key subprocesses we need to automate are as follows:

- Go to the landing page and find the link to the download page for the template;

- Navigate to the download page for each template and input the same full name and email information to download the file;

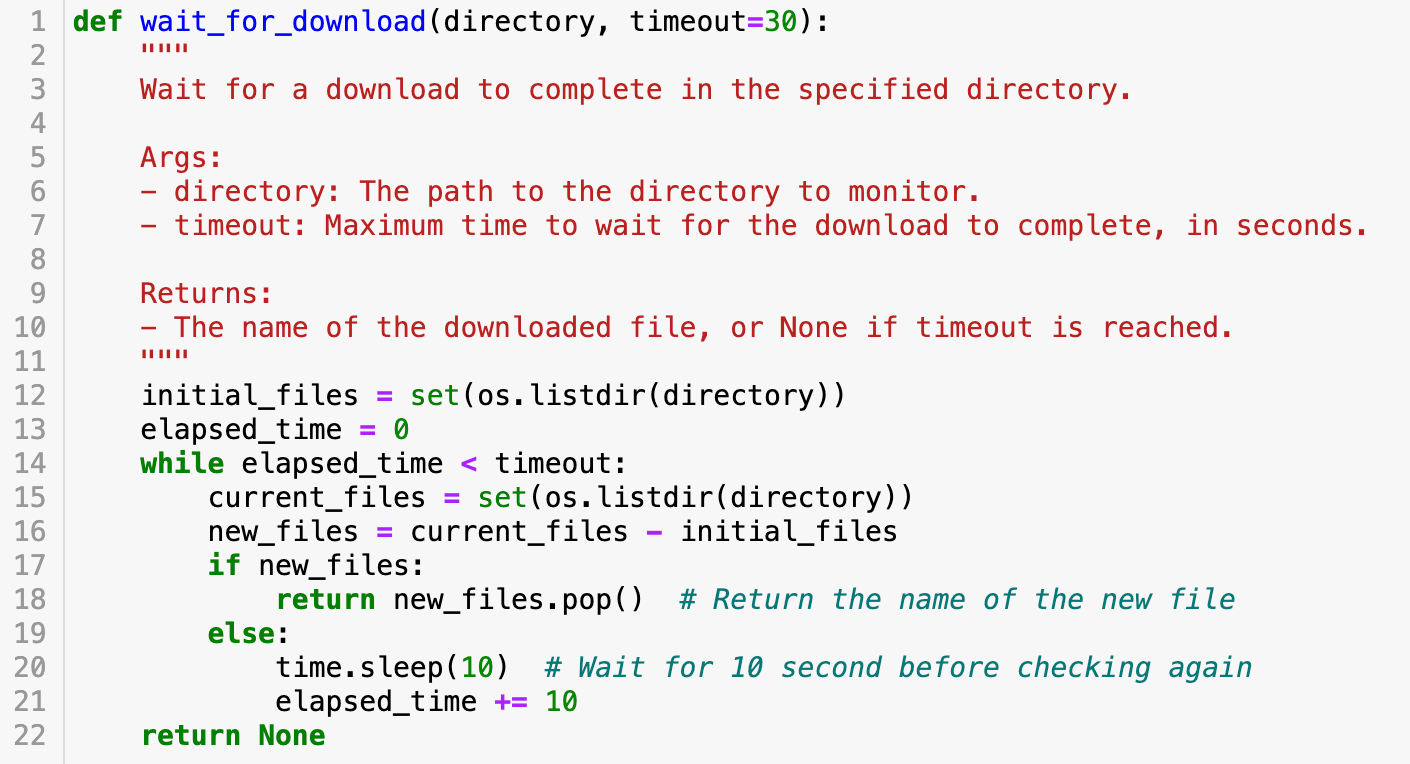

- Wait for the file to download and move the file from the download folder to a specific directory.

Step 3: Restructure the sub-processes to improve efficiency for the bot we are going to create.

We soon find that we keep navigating back to the main templates page after each download. We can restructure this part more efficiently: store the URLs of all templates first, and then navigate to each one directly without going back to the main page.
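The "collect first, visit later" idea can be sketched as follows. This is not the code from our repository: it assumes each listing page has its own URL and that every template card is a link matched by a CSS selector you supply, and the names `collect_template_links`, `listing_urls`, and `card_selector` are hypothetical. The function only relies on the `get`, `find_elements`, and `get_attribute` methods that a Selenium driver and its elements provide.

```python
CSS_SELECTOR = "css selector"  # string value of Selenium's By.CSS_SELECTOR


def collect_template_links(driver, listing_urls, card_selector):
    """Visit each listing page once and return the de-duplicated card links."""
    links = []
    seen = set()
    for listing_url in listing_urls:
        driver.get(listing_url)
        for card in driver.find_elements(CSS_SELECTOR, card_selector):
            href = card.get_attribute("href")
            # Skip cards without a link and links we have already stored.
            if href and href not in seen:
                seen.add(href)
                links.append(href)
    return links
```

With a real browser, `driver` would be something like `webdriver.Chrome()` from the `selenium` package. Each listing page is then loaded exactly once, instead of once per download.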

Step 4: Now, let’s code, shall we?

You may find the original code in this GitHub repository

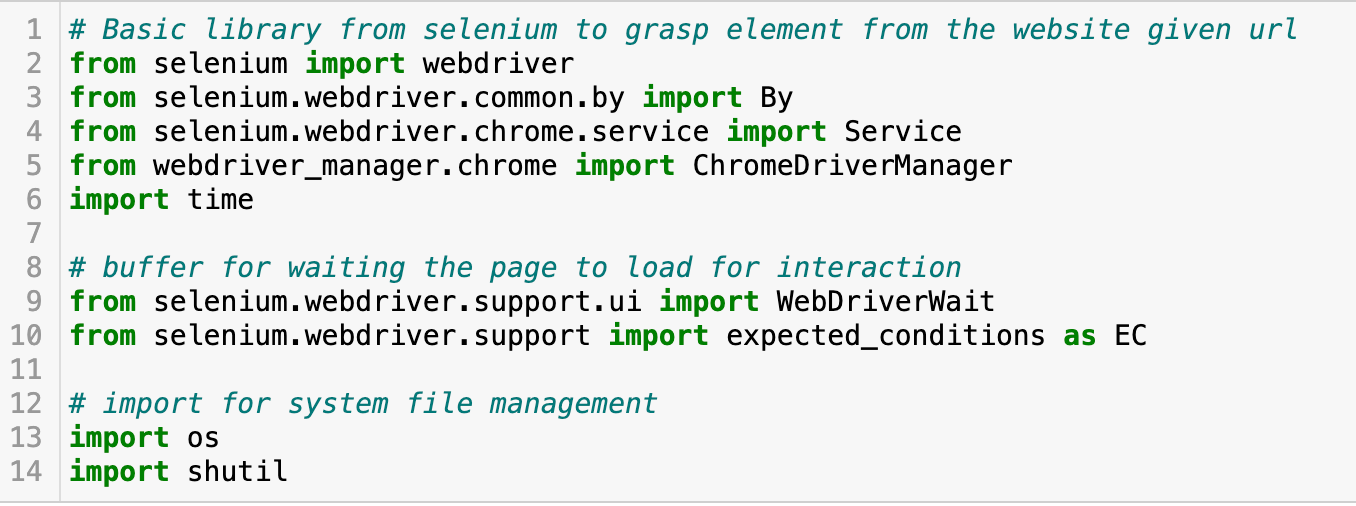

First, let’s import the libraries.

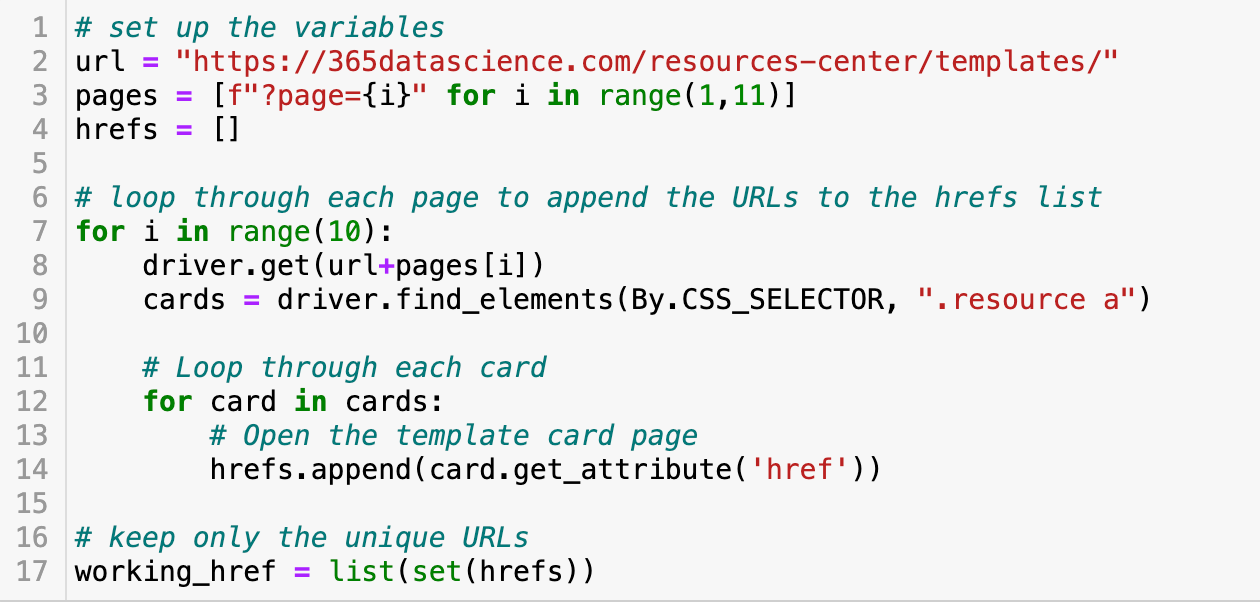

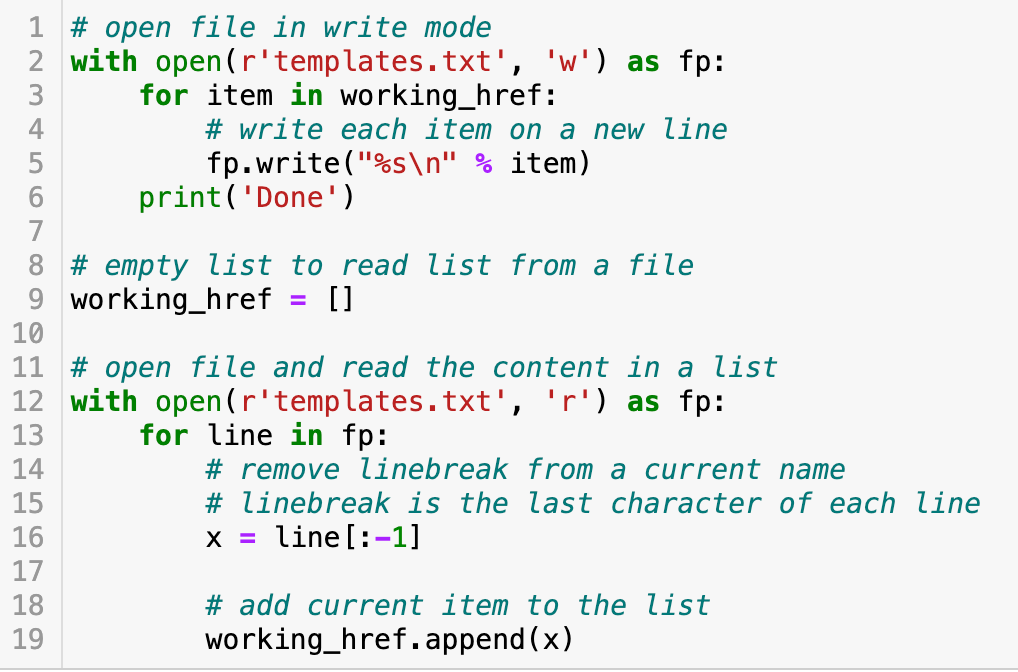

Start by collecting the list of download-page URLs, one per template, so that the robot can visit each page directly to download the file without having to get back to the landing page each time.

- Here, we can even take the extra step of saving the list to a text file for future reference. That way, we won't need the robot to crawl the website again if we shut down the program and want to recover the list.
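Saving and reloading the list could look like the sketch below, which writes one URL per line. The helper names `save_links` and `load_links` and the file name in the usage note are hypothetical.

```python
from pathlib import Path


def save_links(links, path):
    """Write one URL per line so the list survives a restart."""
    Path(path).write_text("\n".join(links) + "\n", encoding="utf-8")


def load_links(path):
    """Read the URLs back, ignoring blank lines."""
    text = Path(path).read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]
```

For example, `save_links(working_href, "template_links.txt")` would store the list once, and `working_href = load_links("template_links.txt")` would restore it in a later session without crawling the site again.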

Running the following code gives the number of templates the bot is going to download (181 in our case).

len(working_href)

Define a file-moving function. Around 200 templates will be downloaded, and things get messy quickly if we do not organize the downloaded files, so let’s define a function that moves a file from the downloads folder to a directory we specify:

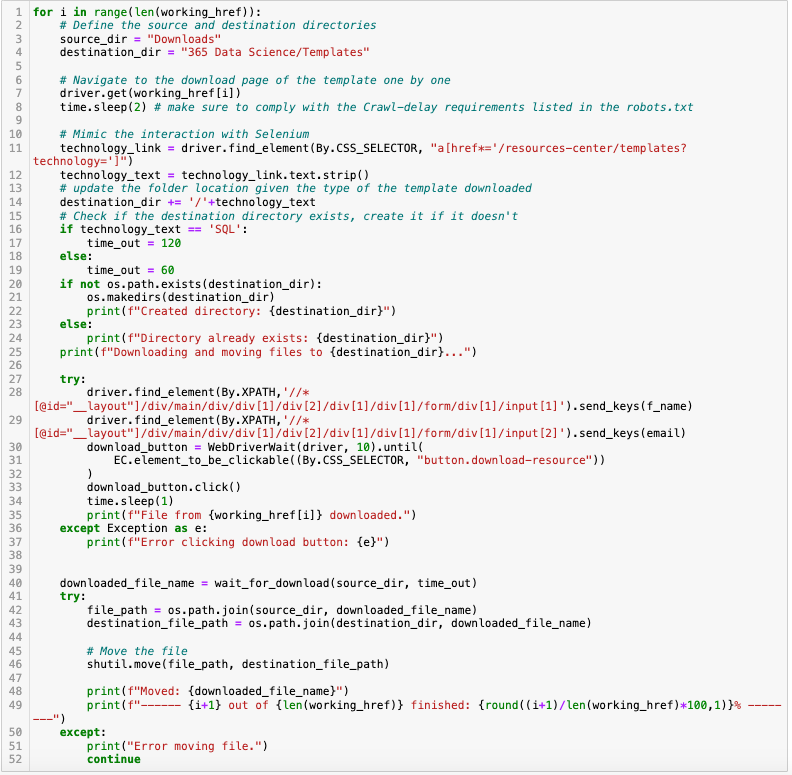
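For readers who cannot view the image, here is a minimal sketch of what such a helper might look like. It is an approximation under our own assumptions, not a transcript of the original function: the name `move_to_category` and its parameters are hypothetical. It creates the category folder when needed and, in line with the behavior described under Limits, prints "Error moving file" and carries on instead of stopping the run.

```python
import shutil
from pathlib import Path


def move_to_category(file_path, base_dir, category):
    """Move file_path into base_dir/category, creating the folder if needed."""
    target_dir = Path(base_dir) / category
    target_dir.mkdir(parents=True, exist_ok=True)
    destination = target_dir / Path(file_path).name
    try:
        shutil.move(str(file_path), str(destination))
    except OSError as exc:
        # Report the problem and let the caller continue with the next file.
        print(f"Error moving file: {exc}")
        return None
    return destination
```

A call such as `move_to_category(downloaded_file, "templates", "Python")` would place the file under `templates/Python/`, where both folder names are placeholders.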

Now comes the core part of our automation script: let’s use the Selenium WebDriver to mimic human interaction with the web pages (the list of URLs we prepared earlier), from navigating links to executing downloads.

Close the driver once the program is done.
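The overall shape of this loop, including closing the driver at the end, might look like the sketch below. The CSS selectors are placeholders you would need to look up on the actual page, the fixed pause is a simplification, and `download_all` is a hypothetical name; the real script in the repository may be organized differently. Skipping a page that fails is our assumption, made so that one broken page does not stop a multi-hour run.

```python
import time

CSS_SELECTOR = "css selector"  # string value of Selenium's By.CSS_SELECTOR


def download_all(driver, urls, full_name, email, selectors, pause_seconds=2.0):
    """Visit each download page, fill in the form, and click Download."""
    visited = []
    try:
        for url in urls:
            try:
                driver.get(url)
                driver.find_element(CSS_SELECTOR, selectors["name"]).send_keys(full_name)
                driver.find_element(CSS_SELECTOR, selectors["email"]).send_keys(email)
                driver.find_element(CSS_SELECTOR, selectors["download"]).click()
                visited.append(url)
            except Exception as exc:  # assumption: skip a failing page, keep going
                print(f"Skipping {url}: {exc}")
            time.sleep(pause_seconds)  # crude pause before the next page
    finally:
        # Always close the browser, even if something unexpected happens.
        driver.quit()
    return visited
```

On pages that render slowly, an explicit wait such as Selenium's `WebDriverWait` is usually a more reliable choice than a fixed pause before looking up the form fields.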

Benefits Realized

The outcome? Once you execute the script, it requires no monitoring and runs in the background for approximately 3-4 hours: a dramatic reduction in manual effort and time.

The folder structure with downloaded files built by the bot

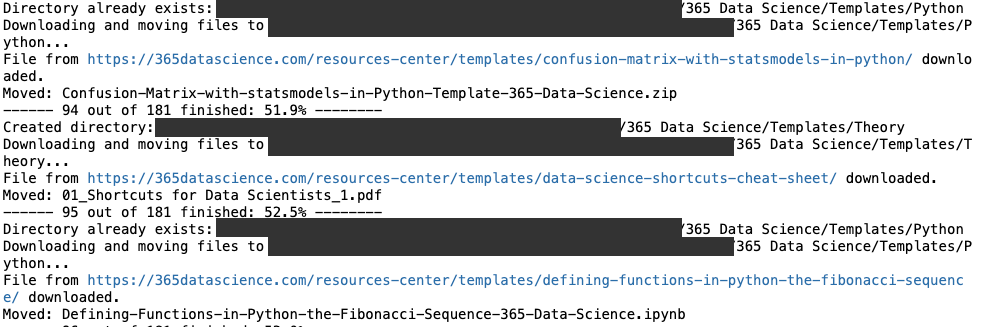

In the meantime, you can always check the progress of the automation program, as we print out the steps the bot is taking (navigating to the download page, downloading and waiting for the downloaded file, or moving the file to the directory) and what percentage of the work it has finished. It's like checking in with your virtual assistant for updates on your project. While your hands are free, you still have full visibility into the progress without having to stare at the screen.
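A progress line of this kind can be produced with a few lines of Python. The exact wording of the messages in our script may differ; `progress_message` is a hypothetical helper that shows the idea.

```python
def progress_message(step, done, total):
    """Format a status line with the current step and percentage finished."""
    percent = 100 * done / total if total else 100.0
    return f"[{done}/{total}] {percent:.1f}% - {step}"


print(progress_message("Moving the file to the directory", 45, 181))
```

Calling this at the start of each sub-process gives a running log you can glance at whenever you like.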

Example of updates given by the bot that help you track the progress

Limits

Sometimes it takes more than 2 minutes for your machine to complete a download, which affects the file-moving sub-process for that specific template. This rarely happens, and for the handful of cases where it does, it is easy to move the file to the corresponding location yourself after the bot completes its tasks, since a structured directory has already been built for you. Note that you will get a message saying "Error moving file," but the flow is uninterrupted, as the code was designed to skip the file when an error occurs. Feel free to examine and improve the code we shared on GitHub for more efficient performance, but given the project's size and purpose (hands-free!), this level of efficiency is sufficient.
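One possible improvement, sketched here under our own assumptions, is to poll the downloads folder until a new, finished file appears rather than relying on a fixed wait. The function name, the list of partial-download suffixes, and the default values are hypothetical; the 120-second default simply mirrors the two-minute figure mentioned above.

```python
import time
from pathlib import Path

# Suffixes browsers commonly use for unfinished downloads (assumed list).
PARTIAL_SUFFIXES = (".crdownload", ".part", ".tmp")


def wait_for_download(download_dir, known_files, timeout=120.0, poll=1.0):
    """Return the first new, finished file in download_dir, or None on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        for path in Path(download_dir).iterdir():
            if path.name in known_files or path.suffix in PARTIAL_SUFFIXES:
                continue  # an older file, or a download still in progress
            if path.is_file():
                return path
        if time.monotonic() >= deadline:
            return None
        time.sleep(poll)
```

To use it, record the existing file names before clicking "Download", for example `before = {p.name for p in Path(download_dir).iterdir()}`, and then call `wait_for_download(download_dir, before, timeout=300)`. A `None` result means the file did not arrive in time and can be handled manually later.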

This case study highlights not only the efficiency and accuracy of our custom-built automation but also its versatility in addressing specific, one-time automation challenges that off-the-shelf tools cannot handle. Even where such a tool could be adapted, figuring out and setting up the customization would probably take just as long before you could use it for your specific purpose.

At CustomerX, we go beyond conventional automation to offer customized solutions that align with your unique operational challenges. Whether you have a one-off task or an ongoing process, our bespoke automation services are designed to deliver unmatched efficiency and precision.

Schedule a 1-on-1 now to learn more about our services and how we can tailor a solution that fits your business needs perfectly.

Key Takeaways

- We advocate for ethical web scraping and automation, ensuring all our solutions comply with legal standards and respect data privacy and website terms of service. Please check the robots.txt file of the website you'd like to scrape and comply with its requirements before running any automation.

- While tools like Zapier, Power Automate, and Hive have revolutionized routine workflows with trigger-based automation, sometimes the one-size-fits-all approach falls short in handling unique, one-off tasks that demand a more tailored solution.

- In this post, we discussed a common automation challenge: manually downloading and categorizing resources from websites, a time-intensive task prone to human error that underscores the need for bespoke automation solutions.

- We walked through the steps of creating a customized automation solution: a bot that can autonomously navigate websites, download resources, and categorize them, significantly reducing the manual effort and time required. The steps are:

- Explore and understand the structure of the web application and the user journey of your project;

- Figure out the key steps and sub-processes as a blueprint for coding the project automation;

- Restructure the sub-processes to improve efficiency for the bot when necessary;

- Code the solution given the blueprint.

- Sometimes, the solution doesn't need to be perfect. To save time, lower cost, and have the solution work efficiently for your specific case, we suggest not spending extra time optimizing functionalities that are not key to your purposes.
